Post by: Anis Karim

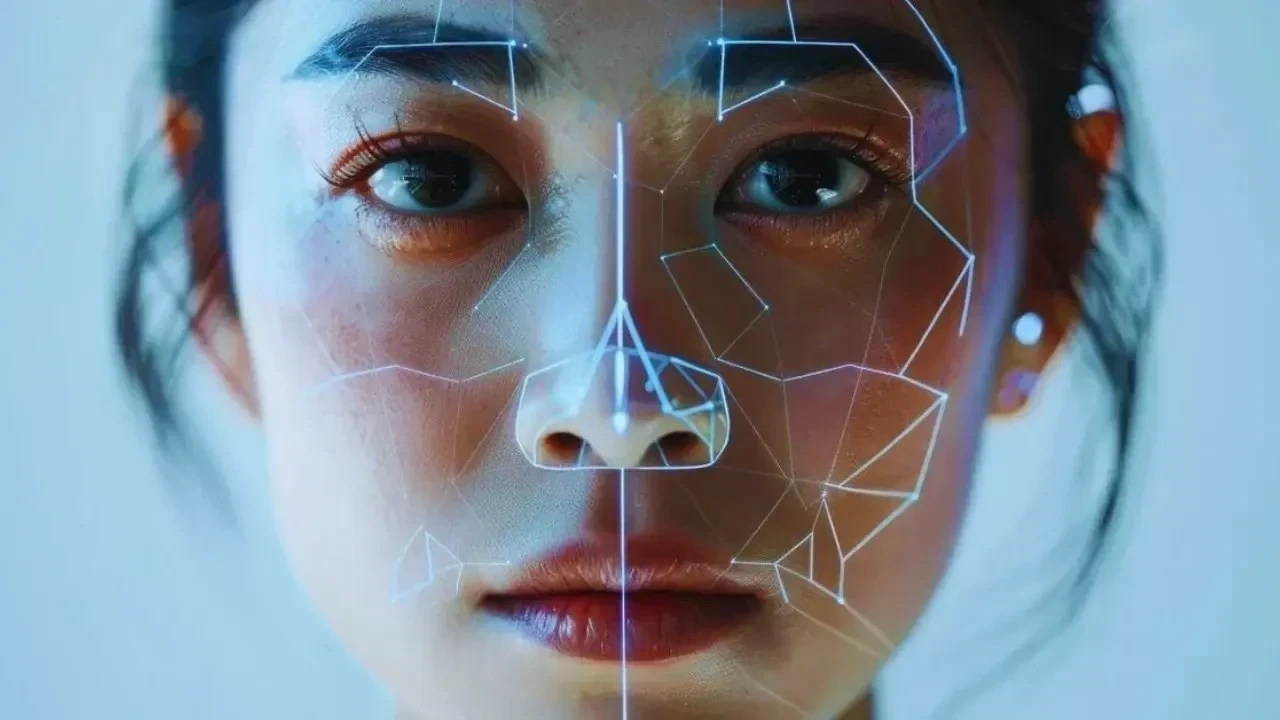

This week, a manipulated video spread rapidly across social platforms, sparking heated arguments, emotional reactions, and widespread confusion. For several hours, people debated its authenticity—some convinced it was real, others certain it had been tampered with. Only after digital experts clarified that it was, in fact, a deepfake did the conversation shift to something far more important:

Do everyday people know how to identify deepfakes before believing or sharing them?

The incident made one thing very clear—deepfakes are no longer rare or restricted to tech-savvy circles. They can emerge from any corner of the internet and spread faster than fact-checkers can intervene. And because they often feature familiar public figures, news-like settings, or emotionally charged moments, people fall for them easily.

This article breaks down, in simple and relatable terms, how anyone can detect deepfakes using practical steps. No technical background needed—just awareness, observation, and patience. And in a week where millions were misled for hours, these steps have become more essential than ever.

Before learning how to spot a deepfake, it’s important to understand what it actually is.

Deepfakes are synthetic media, most often videos, created using artificial intelligence. They replace a real person’s face or voice with a manipulated version, making it appear as if someone said or did something they never did. Some deepfakes are amateurish and easy to spot; others are sophisticated enough to fool even sharp-eyed viewers.

They tend to spread during:

- Heated political moments
- Celebrity controversies
- Breaking news cycles
- Social-media trends
- Emotional events

Because deepfakes tap into curiosity and shock value, they get shared instantly—bypassing skepticism.

But with the right awareness, anyone can learn to identify them.

This week’s viral incident highlighted the first major giveaway—the eyes felt “off.”

Deepfake creators still struggle to perfect:

- Natural blinking
- Eye movement that matches head movement
- Light reflections on the cornea
- Smooth tracking

Watch carefully for:

- Long stretches without blinking
- Rapid, unnatural blinking
- Eyes that don’t focus on anything
- Pupils that look flat or “pasted on”

Humans rarely keep their eyes perfectly static—deepfakes often do.
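For readers comfortable with a little code, the blink checks above can be expressed as a rough sketch. It assumes some other tool has already produced one true/false "eyes closed" flag per video frame; that input, the function names, and the thresholds are illustrative assumptions rather than part of any specific detection product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BlinkSummary:
    blink_count: int
    blinks_per_minute: float
    longest_open_stretch_s: float


def summarize_blinks(eyes_closed: List[bool], fps: float) -> BlinkSummary:
    """Summarize blinking from per-frame 'eyes closed' flags (hypothetical input)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    blink_count = 0
    longest_open = 0   # longest run of open-eye frames seen so far
    current_open = 0   # length of the current open-eye run
    previous_closed = False
    for closed in eyes_closed:
        if closed:
            if not previous_closed:
                blink_count += 1  # an open -> closed transition starts a blink
            current_open = 0
        else:
            current_open += 1
            longest_open = max(longest_open, current_open)
        previous_closed = closed
    duration_s = len(eyes_closed) / fps
    per_minute = blink_count * 60.0 / duration_s if duration_s > 0 else 0.0
    return BlinkSummary(blink_count, per_minute, longest_open / fps)


def blink_warnings(summary: BlinkSummary,
                   max_open_stretch_s: float = 20.0,
                   max_blinks_per_minute: float = 60.0) -> List[str]:
    """Return warnings; both thresholds are illustrative assumptions."""
    warnings = []
    if summary.longest_open_stretch_s > max_open_stretch_s:
        warnings.append("long stretch without blinking")
    if summary.blinks_per_minute > max_blinks_per_minute:
        warnings.append("rapid, unnatural blinking")
    return warnings
```

A clip that trips either warning is not proven fake; it is simply worth a closer look.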

Lip-syncing is another area where deepfakes slip.

Look for:

- Words that don’t align with mouth shapes
- Delayed lip movement
- Stiff jaw motions
- Teeth that look smudged or unnaturally bright
- Too-smooth or rubbery lips

This week’s deepfake incident showed slight lag around the mouth—an early sign many viewers missed.
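The kind of slight lag described above can be estimated if two per-frame measurements are available: how loud the audio is and how open the mouth is. The sketch below assumes both series already exist (how they are extracted is outside its scope) and tries small shifts to find the one where they line up best. All names here are hypothetical.

```python
from typing import Sequence


def best_lag(audio_energy: Sequence[float],
             mouth_open: Sequence[float],
             max_lag: int) -> int:
    """Return the shift, in frames, at which mouth movement best matches the audio.

    A positive result means the mouth moves after the sound (the mouth lags).
    Both inputs are assumed to be per-frame series of similar length.
    """
    def score(lag: int) -> float:
        # Pair audio frame i with mouth frame i + lag, skipping out-of-range pairs.
        pairs = [
            (audio_energy[i], mouth_open[i + lag])
            for i in range(len(audio_energy))
            if 0 <= i + lag < len(mouth_open)
        ]
        if not pairs:
            return float("-inf")
        # Average product: high when loud frames coincide with an open mouth.
        return sum(a * m for a, m in pairs) / len(pairs)

    return max(range(-max_lag, max_lag + 1), key=score)
```

A result near zero suggests audio and mouth are in step. A consistent offset of several frames is a reason to look more closely, although poorly encoded genuine videos can also drift out of sync.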

Lighting on the edges of the face is often inconsistent in deepfakes.

Check the:

- Jawline
- Hairline
- Neck area
- Ears

If any of these appear:

- Blurry
- Flickering
- Sharper than the rest
- Slightly displaced
- Surrounded by mismatched lighting

…it’s likely fake.

Deepfake overlays often fail at blending hair strands, shadow angles, and skin transitions perfectly.

Human skin contains:

- Pores
- Lines
- Shine variation
- Tiny imperfections

Deepfakes often look:

- Too smooth
- Too airbrushed
- Too uniform
- Inconsistent under changing light

A “plastic” or “CGI” look is a strong indicator.

Deepfakes sometimes make heads:

- Tilt at unnatural angles
- Move differently from the shoulders
- Pivot too smoothly or too sharply

If the head looks slightly disconnected from the body—almost floating—it’s a red flag.

Humans express emotions with:

- Micro-expressions
- Muscle tightening
- Forehead creases
- Eye narrowing

Deepfakes struggle to replicate these subtle shifts.

If the emotional tone of the voice doesn’t match the face—or the person seems “emotionally flat”—your suspicion should rise.

Deepfake creators often only manipulate the face, not the rest of the body.

Look for:

- Natural arm movement
- Posture consistency
- Hand gestures matching speech patterns
- Reflex reactions

If the body looks stiff or the gestures feel mismatched, the facial overlay may be artificial.

A major giveaway in many deepfakes is incorrect lighting.

Compare:

- Shadows on the face
- Shadows in the room
- Light direction
- Reflections

If the lighting on the face doesn’t match the environment, the video has probably been modified.

Pause the video and watch frame by frame (if possible).

Common artifacts include:

- Glitches around the mouth
- Melting edges
- Flickering pixels
- Ghost-like outlines
- Color shifts

Even advanced deepfakes occasionally leave such traces.
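Frame-by-frame checking can also be roughed out in code. The sketch below assumes the frames have already been decoded into small grayscale grids (lists of rows of pixel values), which is an assumption about the input rather than a feature of any particular tool. It flags frames that differ from the previous frame far more than is typical for the clip.

```python
from statistics import median
from typing import List, Sequence

Frame = Sequence[Sequence[int]]  # rows of grayscale pixel values (assumed input)


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel difference between two same-sized frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def flicker_candidates(frames: List[Frame], spike_factor: float = 5.0) -> List[int]:
    """Return indices of frames that change unusually sharply from the one before.

    spike_factor is an illustrative threshold: a frame is flagged when its
    change exceeds spike_factor times the clip's median frame-to-frame change.
    """
    diffs = [mean_abs_diff(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    if not diffs:
        return []
    baseline = max(median(diffs), 1e-9)  # avoid a zero baseline on static clips
    return [i for i, d in enumerate(diffs, start=1) if d > spike_factor * baseline]
```

Ordinary scene cuts and camera flashes produce the same kind of spike, so the flagged frames are only places to pause and inspect by eye, not evidence on their own.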

Deepfake voices often:

- Miss emotional tone
- Have flat pitch
- Lack breathing sounds
- Feel robotic when sentences shift
- Sound disconnected from room acoustics

If the voice feels overly clean, monotone, or strangely synthetic, question it.

Bonus tip: if the voice sounds real but the mouth doesn’t match, the clip has very likely been manipulated.

Creators often focus on the face and forget the surroundings.

Watch the:

- Background blur
- Object movement
- Shadow consistency
- Reflections

If background elements warp or move unnaturally when the person moves, it’s suspicious.

Even if the video looks real, always cross-check:

- Did any credible source report it?
- Has the person or official representative commented?
- Is the video contextually believable?
- Are multiple versions circulating?
- Does the clip feel intentionally dramatic or divisive?

Deepfakes thrive on emotional triggers.

This week’s incident spread because people reacted first and verified later—the exact trap deepfake creators rely on.

Deepfakes are engineered to provoke reactions before rational thought kicks in.

Timing is often strategic.

Deepfakes exploit familiarity to amplify confusion.

Compression inconsistencies are major hints.

You don’t need to be an expert—just aware.

People this week used simple online tools to detect anomalies:

- Reverse video search
- Frame-by-frame scrubbing
- Audio analysis apps
- Metadata checkers
- Slow-motion playback

These tools don’t guarantee accuracy, but they help identify suspicious elements.

Deepfake technology is getting better every month. While detection tools improve, so do manipulation techniques.

This week proved that even a moderately convincing deepfake can:

- Damage reputations
- Trigger arguments
- Influence public opinion
- Spread misinformation
- Manipulate emotions
- Generate panic or outrage

Being able to identify deepfakes is no longer optional—it's part of digital survival.

- Always wait for credible verification
- Avoid sharing emotionally charged videos instantly
- Train your eye to spot inconsistencies
- Educate friends and family who may be less aware
- Follow official channels for clarification
- Stay updated on common manipulation techniques

Digital literacy is a community responsibility, not just an individual one.

This week’s deepfake incident wasn’t just another viral moment; it was a wake-up call. It showed how easily manipulated videos can infiltrate public conversations, influence emotions, and shape narratives in minutes. But it also showed that awareness spreads just as quickly.

By learning to spot deepfakes through small visual cues, background inconsistencies, emotional mismatch, and simple verification steps, everyday people can protect themselves—and others—from falling into misinformation traps.

Deepfakes will keep evolving, but so will human awareness. And the more we question, observe, and pause before reacting, the stronger our digital immunity becomes.

This article is for general informational purposes only. Deepfake detection may require professional tools in complex cases. Always verify sensitive content with credible sources before drawing conclusions.

10 Songs That Carry the Same Grit and Realness as Banda Kaam Ka by Chaar Diwari

From underground hip hop to introspective rap here are ten songs that carry the same gritty realisti

PPG and JAFZA Launch Major Tree-Planting Drive for Sustainability

PPG teams up with JAFZA to plant 500 native trees, enhancing green spaces, biodiversity, and air qua

Dubai Welcomes Russia’s Largest Plastic Surgery Team

Russia’s largest plastic surgery team launches a new hub at Fayy Health, bringing world-class aesthe

The Art of Negotiation

Negotiation is more than deal making. It is a life skill that shapes business success leadership dec

Hong Kong Dragon Boat Challenge 2026 Makes Global Debut in Dubai

Dubai successfully hosted the world’s first Hong Kong dragon boat races of 2026, blending sport, cul

Ghanem Launches Regulated Fractional Property Ownership in KSA

Ghanem introduces regulated fractional real estate ownership in Saudi Arabia under REGA Sandbox, ena

Why Drinking Soaked Chia Seeds Water With Lemon and Honey Before Breakfast Matters

Drinking soaked chia seeds water with lemon and honey before breakfast may support digestion hydrati

Why Drinking Soaked Chia Seeds Water With Lemon and Honey Before Breakfast Matters

Drinking soaked chia seeds water with lemon and honey before breakfast may support digestion hydrati

Morning Walk vs Evening Walk: Which Helps You Lose More Weight?

Morning or evening walk Learn how both help with weight loss and which walking time suits your body

What Really Happens When You Drink Lemon Turmeric Water Daily

Discover what happens to your body when you drink lemon turmeric water daily including digestion imm

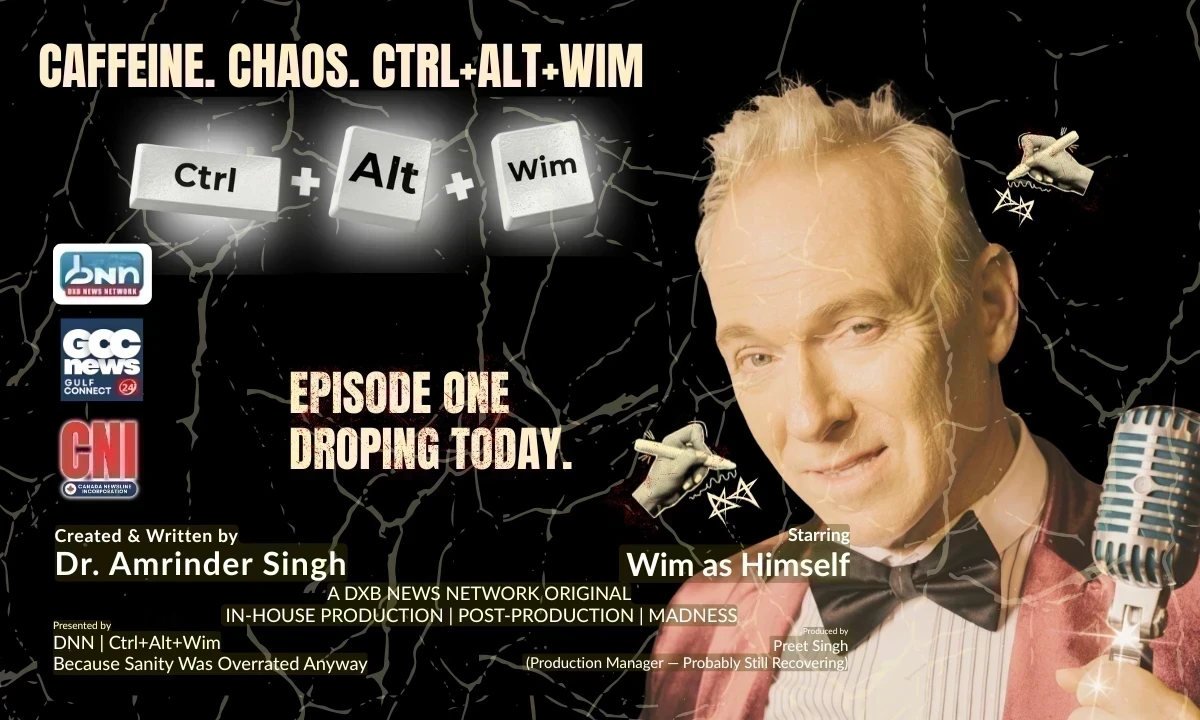

DXB News Network Presents “Ctrl+Alt+Wim”, A Bold New Satirical Series Starring Global Entertainer Wim Hoste

DXB News Network premieres Ctrl+Alt+Wim, a bold new satirical micro‑series starring global entertain

High Heart Rate? 10 Common Causes and 10 Natural Ways to Lower It

Learn why heart rate rises and how to lower it naturally with simple habits healthy food calm routin

10 Simple Natural Remedies That Bring Out Your Skin’s Natural Glow

Discover simple natural remedies for glowing skin Easy daily habits clean care and healthy living ti

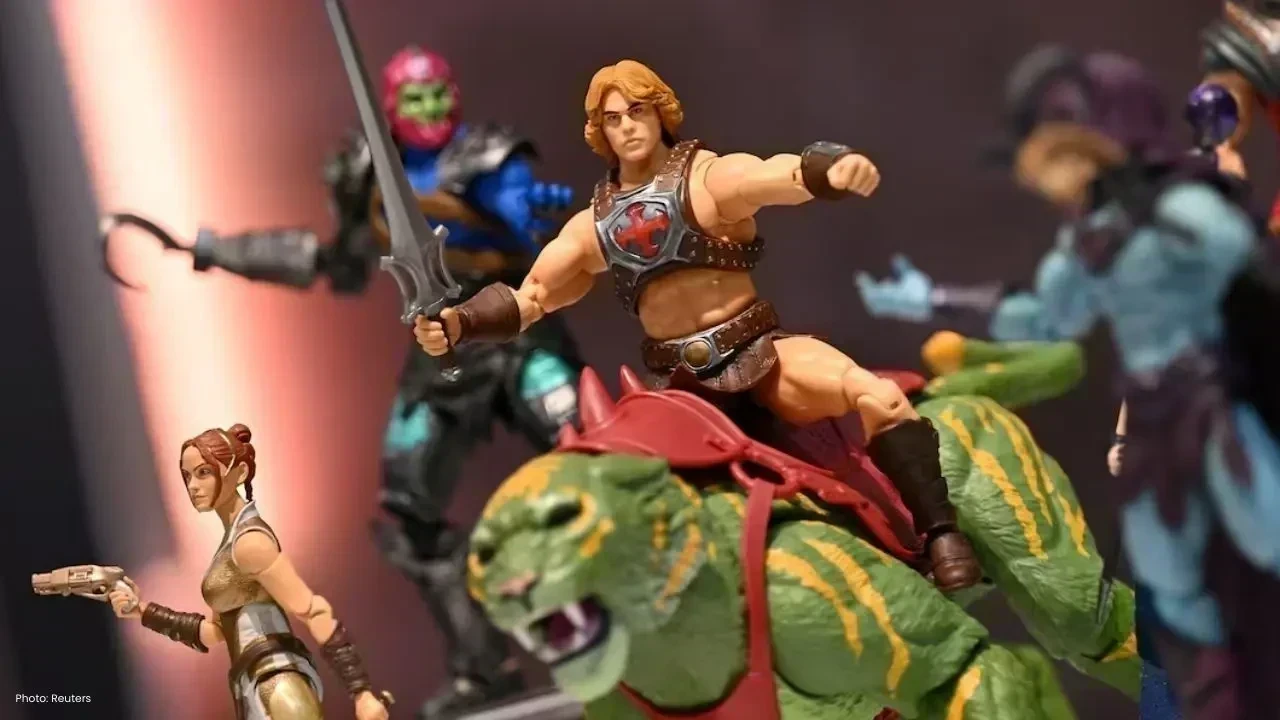

Mattel Revamps Masters of the Universe Action Figures for Upcoming Film

Mattel is set to revive Masters of the Universe action figures in sync with their new movie, ignitin

China Executes 11 Members of Infamous Ming Family Behind Myanmar Scam Operations

China has executed 11 Ming family members, linked to extensive scams and gambling in Myanmar, causin